2023 Georgia Scientific Computing Symposium (GSCS’23)

Georgia State University, Saturday, February 18, 2023.

Introduction

The Georgia Scientific Computing Symposium is a forum for professors, postdocs, graduate students and other researchers in Georgia to meet in an informal setting, to exchange ideas, and to highlight local scientific computing research. The symposium has been held every year since 2009 and is open to the entire research community.

This year, the symposium will be held on Saturday, February 18, 2023, in Student Center East Ballroom – Senate Salon at Georgia State University (66 Courtland Street SE, Atlanta, GA 30303).

Webinar Link: https://gsumeetings.webex.com/gsumeetings/j.php?MTID=m14624f53e69b7b20c6e857c41593d75c

Organizers

Leslie Meadows, lmeadows2@gsu.edu, Georgia State University

Jun Kong, jkong@gsu.edu, Georgia State University

Invited Speakers

Pejman Sanaei, Department of Mathematics and Statistics, Georgia State University

Florian Schaefer, School of Computational Science and Engineering, Georgia Tech

Ruiwen Shu, Department of Mathematics, University of Georgia

Elizabeth Newman, Department of Mathematics, Emory University

Martin B. Short, Department of Mathematics, Georgia Tech

Schedule

Full schedule and abstracts can be downloaded here.

Saturday, February 18th, 2023

| Time | Speaker | Title |
| --- | --- | --- |
| 8:00-9:00 | Registration, Poster Setup, Continental Breakfast, Discussion | |
| 9:00-9:15 | Opening Remarks (Dr. Sara Rosen, Dean of the College of Arts and Sciences at GSU) | |
| 9:15-10:00 | Dr. Pejman Sanaei | Fluid structure interaction, from flight stability of wedges to tissue engineering and moving droplets on a filter surface |
| 10:00-10:45 | Dr. Florian Schaefer | Untangling computation |
| 10:45-11:00 | Coffee/Tea Break | |
| 11:00-12:00 | Student Lightning Talks (8-10 minutes each) | |
| 12:00-12:30 | Panel Discussion on the Future of Scientific Computing | |
| 12:30-13:00 | Lunch | |
| 13:00-13:30 | Poster Session / Lunch (continues) | |
| 13:30-14:15 | Dr. Ruiwen Shu | Some recent results on the numerical methods for stiff kinetic equations |
| 14:15-15:00 | Dr. Elizabeth Newman | How to train better: exploiting the separability of deep neural networks |
| 15:00-16:00 | Student Lightning Talks (8-10 minutes each) | |
| 16:00-16:30 | Poster Session | |
| 16:30-17:15 | Dr. Martin B. Short | A Bayesian method for making less socially-biased predictions/classification |
| 17:15-17:30 | Closing Remarks (Dr. Brian Blake, President of GSU) | |
| 18:00-21:00 | Dinner at a Nearby Restaurant - Hsu’s Gourmet Asian Cuisine (at your own cost) | |

Registration

Registration is free through February 11, 2023; register here.

Questions regarding registration may be addressed to the organizers listed above.

Invited Speaker Talks and Abstracts

Pejman Sanaei:

Title: Fluid structure interaction, from flight stability of wedges to tissue engineering and moving droplets on a filter surface

Abstract: In this talk, I will present three problems on fluid structure interaction. 1) Flight stability of wedges: Recent experiments have shown that cones of intermediate apex angles display orientational stability with apex leading in flight. Here we show in experiments and simulations that analogous results hold in the two-dimensional context of solid wedges or triangular prisms in planar flows at Reynolds numbers 100 to 1000. Slender wedges are statically unstable with apex leading and tend to flip over or tumble, and broad wedges oscillate or flutter due to dynamical instabilities, but those of apex half angles between about 40° and 55° maintain stable posture during flight. The existence of “Goldilocks” shapes that possess the “just right” angularity for flight stability is thus robust to dimensionality. 2) Tissue engineering: In a tissue-engineering scaffold pore lined with cells, nutrient-rich culture medium flows through the scaffold and cells proliferate. In this process, both environmental factors, such as flow rate and shear stress, and cell properties have significant effects on tissue growth. Recent studies have focused on the effects of scaffold pore geometry on tissue growth, while in this work we focus on nutrient depletion and the consumption rate by the cells, which change the nutrient concentration of the feed and influence the growth of cells lined downstream. 3) Moving droplets on a filter surface: Catalysts are an integral part of many chemical processes. They are usually made of a dense but porous material such as activated carbon or zeolites, which provides a large surface area. Liquids that are produced as a byproduct of a gas reaction at the catalyst site are transported to the surface of the porous material, slowing down transport of the gaseous reactants to the catalyst active site. One example of this is in a sulphur dioxide filter, which converts gaseous sulphur dioxide to liquid sulphuric acid. Such filters are used in power plants to remove the harmful sulphur dioxide that would otherwise contribute to acid rain. Understanding the dynamics of the liquid droplets in the gas channel in a device is critical in order to maintain performance and durability of the catalyst assembly. Our goal is to develop a mathematical model using the Immersed Boundary Method to quantify the droplet movement on the filter surface.

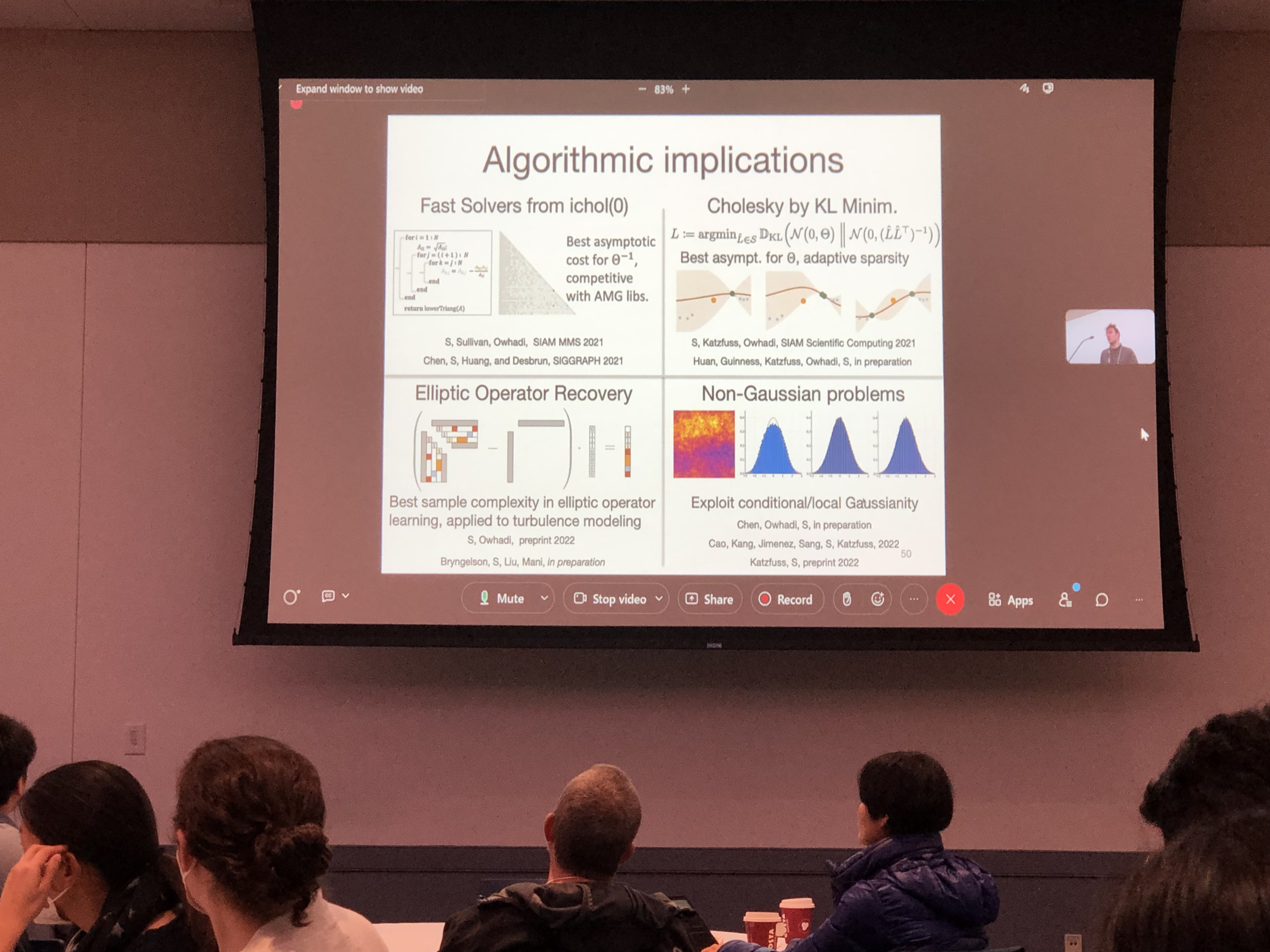

Florian Schaefer:

Title: Untangling computation

Abstract: Interactions of many degrees of freedom and effects on different scales pose challenges in computational science and engineering. This talk presents strategies for untangling computation, based on conditional independence properties of Gaussian processes and multi-agent games. The first part of the talk introduces the relationship between elliptic PDEs and smooth Gaussian processes. By relating the conditional independence properties of Gaussian processes to the sparsity of Cholesky factors of discretized PDE operators, we develop a suite of fast solvers that improve upon the state-of-the-art error-vs-complexity estimates for general elliptic PDEs. We then use these ideas to learn elliptic solution operators to accuracy $\epsilon$, using only $\mathrm{polylog}(1/\epsilon)$ solution pairs. Finally, we apply our operator learning approach to identifying closure operators in computational fluid dynamics using the framework of the macroscopic forcing method. The second part of the talk investigates the use of multi-agent formulations to solve ill-conditioned or constrained optimization problems. It introduces competitive physics-informed networks (CPINNs), a modification of the popular PINN framework that casts the solution of a PDE as a zero-sum game. Optimizing the resulting game using competitive gradient descent improves PINN accuracy by multiple orders of magnitude. Time permitting, we conclude by illustrating connections between the topics of the two parts.

Ruiwen Shu:

Title: Some recent results on the numerical methods for stiff kinetic equations

Abstract: In this talk I will discuss numerical methods for stiff kinetic equations. Such equations, including the Boltzmann equation, the Landau equation and many related models, are very important in the study of rarefied gas dynamics and plasma physics. One major numerical difficulty is the stiffness that originates from the possibly small Knudsen number. Asymptotic-preserving (AP) schemes are designed to overcome this difficulty, capturing the correct asymptotic limit without resolving the small scales. In the first part of my talk, I will discuss how to preserve the positivity of solutions while having the AP property with high order temporal accuracy. In the second part, I will discuss the order reduction issue of AP schemes in the intermediate regime.

Elizabeth Newman:

Title: How to train better: exploiting the separability of deep neural networks

Abstract: Deep neural networks (DNNs) have gained undeniable success as high-dimensional function approximators in countless applications. However, there is a significant hidden cost behind the success: the cost of training DNNs. Typically, the training problem is posed as a stochastic optimization problem with respect to the learnable DNN weights. With millions of weights, a non-convex and non-smooth objective function, and many hyperparameters to tune, solving the training problem well is no easy task. In this talk, we will make DNN training easier by exploiting the separability of common DNN architectures; that is, the weights of the final layer of the DNN are applied linearly. We will leverage this linearity in two ways. First, we will approximate the stochastic optimization problem deterministically via a sample average approximation. In this setting, we can eliminate the linear weights through variable projection (i.e., partial optimization). Second, in the stochastic optimization setting, we will consider a powerful iterative sampling approach to update the linear weights, which notably incorporates automatic regularization parameter selection methods. Throughout the talk, we will demonstrate the efficacy of these two approaches through numerical examples.

Martin B. Short:

Title: A Bayesian method for making less socially-biased predictions/classification

Abstract: Sophisticated numerical algorithms are increasingly a part of our everyday lives, and are sometimes used to make important decisions that have major effects on individuals. Because of this, many people are concerned with whether or not these algorithms seem to give "fair" results when applied to a variety of different individuals. Generally, "fairness" can have many definitions and interpretations, but is often related to whether or not the algorithms appear to bias results toward or against specific social groups. Such bias would almost certainly not be intentional, but rather arise naturally given the data set(s) and method at hand, and can only be avoided through careful algorithm design. In this talk I will discuss some existing methods for doing this, all of which are based on batch processing of data, and highlight some advantages and disadvantages. I will then describe an online, Bayesian method recently developed by myself and George Mohler, and highlight some advantages it has over the other methods within dynamic settings.

Schedule for lightning talks:

Lightning talks (11:00 am-12:00 pm)

- Haniyeh Fattahpour, GSU: Effects of elasticity on cell proliferation in a tissue-engineering scaffold pore

- Hwi Lee, GaTech: A second order accurate finite difference method on uniform rectangular grids for Maxwell’s equations around curved PEC

- Zhaiming Shen, UGA: Applying Kolmogorov Superposition Theorem to Break the Curse of Dimensionality

- Ho Law, GaTech: Surface Reconstruction Using Directional G-norm

- Mengyi Tang, GaTech: Weak formulation for Identifying Differential Equation using Narrow-fitting and Trimming

- Vladimir Bondarenko, GSU: Multiresonances and Memory in an Analog Hopfield Neural Network with Time Delays

- Shifan Zhao, Emory: GDA-AM: On the effectiveness of solving minimax optimization via Anderson Acceleration

- Ming-Jun Lai, UGA: A Compressed Sensing Based Least Squares Approach to Semi-supervised Local Cluster Extraction

- Emeka Peter Mazi, GSU: Mathematical modeling of erosion and deposition in porous media

- Bobby Hightower, GSU: Leaders, Followers, and Cheaters in Collective Cancer Invasion

- Conlain Kelly, GaTech: Hybrid physics-centric learning architectures for statistical continuum mechanics

Poster session

Poster sessions will be held during lunch and during the afternoon break. Anyone can present a poster, but we especially encourage undergraduate students, graduate students, and postdocs to use this opportunity to advertise their work. Please provide a poster title when you register. Posters should be set up by 9:30 am. We will also have two Lightning Talk sessions by students. In each session, each poster presenter will have 10 minutes to present their poster.

Locations

Conference: Student Center - Senate Salon, 66 Courtland Street SE, Atlanta, GA 30303

Parking: GSU Atlanta G Deck. Parking is free for all GSCS’23 participants. Directions here. Please park in G Deck for free using the Collins Street entrance (the one below the Courtland) from 7 a.m. to 10 p.m.

Public Transit: MARTA Train Gold/Red Lines at Peachtree Center Station.

Dinner (at your own cost) - Hsu’s Gourmet Asian Cuisine.

Acknowledgements

GSCS 2023 is supported by the Department of Mathematics and Statistics at Georgia State University and the Georgia State University Research Foundation.
